TL;DR: Whisper.cpp is the fastest when you’re trying to use the large Whisper model on a Mac. For top-quality results with languages other than English, I recommend to ask model to translate into English.

About Whisper

Whisper is OpenAI’s speech-to-text model and it’s well-known for its impressive results. Although I knew about it for a while, I didn’t get to test its real-world performance until recently. So, I spent a weekend seeing how it could handle converting speeches, in both English and other languages, into text.

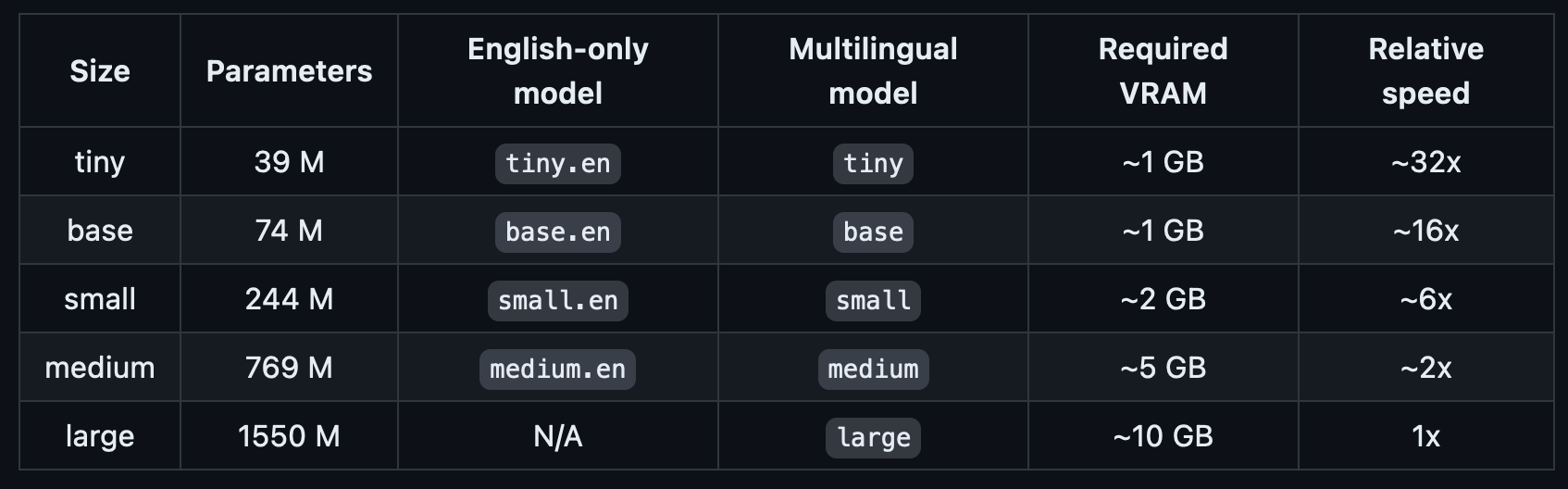

Whisper comes in different sizes and two types. The smallest one (tiny) is really fast but not so accurate, while the largest one (large) is slower but way more precise. Some people have found that the smaller versions work fine for most English speeches, but I was interested in seeing if it could handle less common languages like Persian as well.

Whisper’s performance varies widely depending on the language and this figure

shows a WER (Word Error Rate) breakdown by languages using the large-v2 model (The smaller the numbers, the better the performance).

Voice Files

I usually use Telegram for messaging, so I had many voice messages saved there. I picked a few for this experiment. I also used the first minute of sound from two English and two Persian YouTube videos. If you want to try this experiment yourself, you can download these files here and here .

Attempting Hugging Face Transformers and Google Colab

At first, I tried to use Google Colab for this experiment so I wouldn’t have to install anything on my laptop. I came across the Whisper model card on hugging face

and tried installing the transformers and loading the model on a Google Colab notebook. The results of the tiny and small models weren’t good for Persian voice, and the medium model caused the Colab session to restart due to insufficient memory, meaning I couldn’t use Google Colab for this experiment.

Also, I found the Hugging Face transformers to be somewhat complex, especially when compared to OpenAI’s python library for Whisper, which seemed much more straightforward. So, I decided to switch over and install this library on my laptop to continue the experiment there.

Experimenting with Whisper Python Library on Macbook

My 8GB RAM Macbook Air couldn’t handle the medium and large models, so I switched to my Macbook Pro for a more intensive experimentation. The Whisper python library

is quite user-friendly. After installation:

pip install -U openai-whisper

And ensuring ffmpeg is installed:

brew install ffmpeg

It’s as easy as a few lines of code:

import whisper

model = whisper.load_model("large")

result = model.transcribe("english.wav")

print(result["text"])

It took 60 seconds to transcribe a 60-second English voice file into:

" Hey, what’s up? I’m Kabeh HD here. Okay, I’m gonna make myself sound really old here just for a second. But about 10 years ago, there was a vibrant ecosystem of a ton of different tablets. There’s all kinds of tablets. There was like iPads versus Android tablets, all these fights going on. There were small screen tablets, big screen tablets, and all the Android tablets were sort of anchored by Google’s family of Nexus tablets. So we had the smash hit Nexus 7 and the cult classic Nexus 10. But then Google kind of got a little bit lost with Android tablets and Chrome OS tablets a little bit in there for a bit, and they made a few bad ones in a row, and then they just gave up. Like they literally just gave up, stopped making tablets. But something else that’s picked up a whole bunch of steam since then is a new product category, smart home displays. So Google bought Nest, and we’ve seen this Nest Hub, and the Nest Hub Max came out, and this is a growing category."

And 109 seconds for a 60 seconds Persian voice file to turn into:

’ سلام من حسام هستم و به قسمت 8 از منجوری لذت اکاسی خوش اومدید توی این قسمت میخوایم راجب اکاسی قضا با هم صحبت بکنیم و یه سیری راه کارها رو با هم داشته باشیم که به تون کمک می کنن اکسهای بهتری را از اون قضاهایی که درست می کنید یا برتون سرف می شد و داشته باشین همراه من باشین ممنبع نوری اولین نکته برای داشتن اکسهای حرفه ای از قضا منبع نوریه خب اگر دست رسیب امکاناتی ویژه مثل نورهای استودیوی ندارید باید دقیق بکنید که از منبع نور طبیعی مثل پنجره استفاده بکنید اینجوری نتائجتون واقعا شگفتنگیز می شه این اکس رو می بینید این با نور پنجره سبت شده به اصلی گوشی و می بینید که چون منبع نوری مود به صورت طبیعیه خیلی نور خوبی متوجه قضا شده و قضا قشنگ'

The English transcription was excellent. As for the Persian translation, I found it somewhat lacking. There were so many typos in the text, and if you were to use it as the subtitle in a video, it would come off as embarrassing. The model does seem to accurately pick up on individual sounds, both vowels and consonants. However, when it attempts to assemble these sounds into a complete word, the results often don’t form valid words in Persian. For example, it heard the word “akkasi” which is the English spelling for the Persian word meaning “photography” (عکاسی), but it transcribed it as اکاسی. This isn’t a valid word in Persian, but if it were, it would also be pronounced as “akkasi.”

Trying whisper.cpp

Looking for a faster way to get the transcriptions, I found that whisper.cpp was much quicker than the Python library for the larger models. To use whisper.cpp, you first need to clone the project, then download a Whisper model:

bash ./models/download-ggml-model.sh large

Then simply run:

./main -m models/ggml-large.bin -f samples/english.wav --output-txt

Make sure your voice file is a 16khz wav, and adjust the location as needed. The --output-txt part gives you a text file of the transcript. Transcribing the English voice took 22 seconds, which is three times faster than the Whisper python library.

For languages other than English, you can add -l <language> to the command:

./main -m models/ggml-large.bin -f samples/persian.wav --output-txt -l fa

This one took about 27 seconds, which is four times faster than the Whisper Python library.

Here’s the interesting part: if you want an English version of a non-English voice file, you can add a -tr flag to the command:

./main -m models/ggml-large.bin -f samples/persian.wav --output-txt -l fa -tr

This command translated the Persian voice to the following English text in just 19 seconds!

Hi, I’m Hesam and welcome to the 8th episode of the “Taste of Photography” series. In this episode, we want to talk about food photography and have some guides with us to help you get the best photos of the foods that you make or serve. Stay with me. [Intro] The first tip for taking professional photos of food is a light source. If you don’t have access to special facilities like studio lights, make sure to use natural light sources like a window. This way, your photos will be more attractive. This photo is taken with a window light. As you can see, the natural light source is a very good light source. The food is very tasty.

The result is quite impressive and more practical than the Persian transcription. It is possible that the higher quality of the translated Persian transcript results from more translation hours in training datasets (see appendix E in the Whisper’s paper ). So, if you’re dealing with non-English speeches and you’re not concerned about presenting the output to users, it’s more effective to translate and use the English text.