In the world of data, text stands out as particularly complex. It doesn’t fall into neat rows and columns the way numerical data does. As a side project, I’m developing my own personal AI assistant. The objective is to use the data in my notes and documents to answer my questions. The key benefit is that all data processing will occur locally on my computer, so no documents are uploaded to the cloud and my documents remain private.

To handle such unstructured data, I’ve found the unstructured Python library to be extremely useful. It’s a flexible tool that works with various document formats, including Markdown, XML, and HTML documents.

Starting with unstructured

You can install the library with pip:

pip install unstructured

Loading and partitioning a document

The first thing you’ll want to do with your document is split it up into smaller parts or sections. This process, called partitioning, makes it easier to categorize and extract text.

Here’s how you do it:

from unstructured.partition.auto import partition

elements = partition(filename="example-docs/note.md")

example-docs/note.md:

## My test title

And here is a sample text.

When we partition a document, the output is a list of document Element objects. These objects represent the different components of the source document. The unstructured library supports various element types, including Title, NarrativeText, and ListItem. To access the element type, you can use the category attribute:

for element in elements:
    print(f"{element.category}:")
    print(element)
    print("\n")

Output:

Title:

My test title

NarrativeText:

And here is a sample text.
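Because every element carries a category, pulling out just one kind of element is a short list comprehension. Here is a minimal sketch of that idea. It uses stand-in objects rather than real unstructured elements so that it runs without the library, and `filter_by_category` is a hypothetical helper, not part of unstructured:

```python
from types import SimpleNamespace


def filter_by_category(elements, category):
    """Return the elements whose `category` attribute equals `category`."""
    return [el for el in elements if el.category == category]


# Stand-ins that mimic the two elements from the note above.
# Real code would pass the list returned by partition() instead.
sample_elements = [
    SimpleNamespace(category="Title", text="My test title"),
    SimpleNamespace(category="NarrativeText", text="And here is a sample text."),
]

titles = filter_by_category(sample_elements, "Title")
print([el.text for el in titles])  # ['My test title']
```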

The list of document elements can be converted to a list of dictionaries using the convert_to_dict function:

from unstructured.staging.base import convert_to_dict

dict_data = convert_to_dict(elements)

Output:

[{'type': 'Title',
  'coordinates': None,
  'coordinate_system': None,
  'layout_width': None,
  'layout_height': None,
  'element_id': 'a3114599252de55bea36c288aa9aa199',
  'metadata': {'filename': 'sample-doc.md',
   'filetype': 'text/markdown',
   'page_number': 1},
  'text': 'My test title'},
 {'type': 'NarrativeText',
  'coordinates': None,
  'coordinate_system': None,
  'layout_width': None,
  'layout_height': None,
  'element_id': '6e78562ede477550604528df644630e8',
  'metadata': {'filename': 'sample-doc.md',
   'filetype': 'text/markdown',
   'page_number': 1},
  'text': 'And here is a sample text.'}]

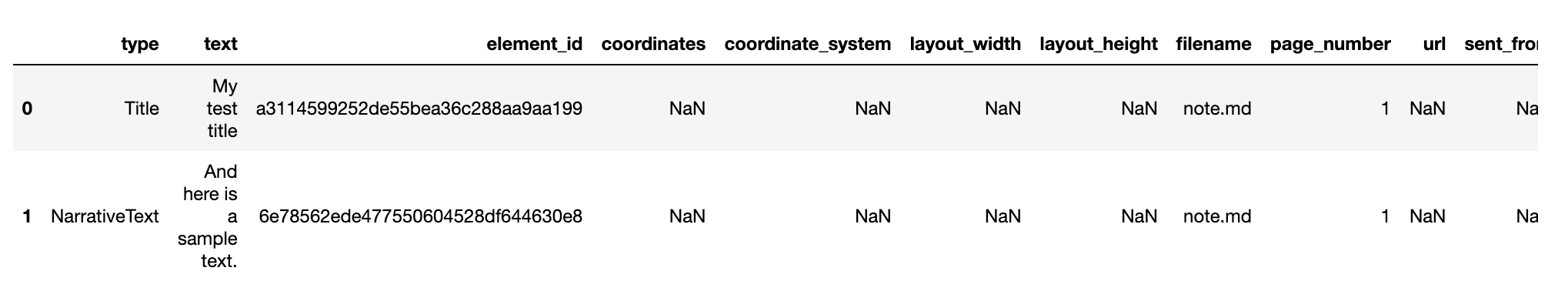

But since I want to store the chunks of text in a database and do some exploratory analysis on the data, I used the convert_to_dataframe function to convert the text elements into a pandas DataFrame:

from unstructured.staging.base import convert_to_dataframe

df = convert_to_dataframe(elements)
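As a rough sketch of the database step, element dictionaries could also be written straight into SQLite using only the standard library. Everything here is an assumption for illustration: the table name, the column layout, and the `store_elements` helper are hypothetical, and the records are trimmed-down, hand-written dictionaries shaped like the convert_to_dict output.

```python
import json
import sqlite3


def store_elements(conn, records):
    """Insert element dictionaries into a simple `elements` table."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS elements ("
        "element_id TEXT PRIMARY KEY, type TEXT, text TEXT, metadata TEXT)"
    )
    conn.executemany(
        "INSERT OR REPLACE INTO elements VALUES (?, ?, ?, ?)",
        [
            # Metadata is nested, so it is stored as a JSON string.
            (r["element_id"], r["type"], r["text"], json.dumps(r["metadata"]))
            for r in records
        ],
    )
    conn.commit()


# Hand-written records shaped like the convert_to_dict output.
records = [
    {"type": "Title", "element_id": "a3114599252de55bea36c288aa9aa199",
     "metadata": {"filename": "note.md", "page_number": 1},
     "text": "My test title"},
    {"type": "NarrativeText", "element_id": "6e78562ede477550604528df644630e8",
     "metadata": {"filename": "note.md", "page_number": 1},
     "text": "And here is a sample text."},
]

conn = sqlite3.connect(":memory:")  # pass a file path to persist the data
store_elements(conn, records)
rows = conn.execute("SELECT type, text FROM elements ORDER BY type").fetchall()
print(rows)
```

Using the element_id as the primary key means that re-running the import on an unchanged document replaces rows instead of duplicating them.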

Getting the metadata

One neat feature of the unstructured library is how it keeps track of various metadata about the elements it extracts from documents. For example, you might want to know which elements come from which page number. You can extract the metadata for a given document element like so:

doc_metadata = elements[0].metadata.to_dict()

print(doc_metadata)

Output:

{'filename': 'note.md', 'filetype': 'text/markdown', 'page_number': 1}

All document types return the following metadata fields when the information is available from the source file: filename, file_directory, date, filetype, and page_number.
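One thing this metadata makes possible is grouping text by where it came from. Below is a minimal sketch that works on plain dictionaries shaped like the convert_to_dict output, so it runs without the library. `group_text_by_page` is a hypothetical helper, and the sample records are hand-written:

```python
from collections import defaultdict


def group_text_by_page(records):
    """Map (filename, page_number) to the list of texts found there."""
    pages = defaultdict(list)
    for record in records:
        meta = record.get("metadata", {})
        # Fields are only present when the source file provides them,
        # so missing ones fall back to None instead of raising KeyError.
        key = (meta.get("filename"), meta.get("page_number"))
        pages[key].append(record["text"])
    return dict(pages)


sample_records = [
    {"text": "My test title",
     "metadata": {"filename": "note.md", "page_number": 1}},
    {"text": "And here is a sample text.",
     "metadata": {"filename": "note.md", "page_number": 1}},
    {"text": "Text without page info.", "metadata": {"filename": "other.md"}},
]

grouped = group_text_by_page(sample_records)
print(grouped[("note.md", 1)])
# ['My test title', 'And here is a sample text.']
```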

Preparing for Transformers

When you’re ready to feed your text into a transformer model for further processing, you can use the stage_for_transformers function. This function prepares your text elements by splitting them into chunks that fit into the model’s attention window.

In the following example, I’m using a library called SentenceTransformers (I’ve written more about using this library in my previous blog post):

from sentence_transformers import SentenceTransformer

from unstructured.staging.huggingface import stage_for_transformers

model = SentenceTransformer("all-MiniLM-L6-v2")

chunked_elements = stage_for_transformers(elements, model.tokenizer)
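To make the idea of fitting text into an attention window more concrete, here is a deliberately simplified sketch. It is not how stage_for_transformers is implemented: it counts whitespace-separated words instead of using the model’s tokenizer, and `chunk_by_tokens` and `max_tokens` are hypothetical names.

```python
def chunk_by_tokens(text, max_tokens):
    """Split text into chunks of at most `max_tokens` whitespace tokens."""
    if max_tokens < 1:
        raise ValueError("max_tokens must be at least 1")
    tokens = text.split()
    return [
        " ".join(tokens[start:start + max_tokens])
        for start in range(0, len(tokens), max_tokens)
    ]


chunks = chunk_by_tokens("one two three four five six seven", max_tokens=3)
print(chunks)  # ['one two three', 'four five six', 'seven']
```

A real tokenizer usually produces more tokens than there are words, so a word-based limit like this one would need a safety margin.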

And now I can load all the notes in a specific directory, so I can convert them to embedding vectors later:

import os

all_elements = []

root_dir = '/corpus'

# Walk the whole directory tree and partition every file found.
for directory, subdirectories, files in os.walk(root_dir):
    for file in files:
        full_path = os.path.join(directory, file)
        all_elements += partition(filename=full_path)
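A notes folder often contains files that are not documents at all (hidden system files, images, and so on), and the loop above would pass every one of them to partition. Here is a minimal sketch of filtering by extension first. The `find_documents` helper and the default extension list are assumptions for illustration:

```python
import os
import tempfile


def find_documents(root_dir, extensions=(".md", ".docx", ".html")):
    """Yield paths under root_dir whose extension is in `extensions`."""
    for directory, _subdirectories, files in os.walk(root_dir):
        for name in sorted(files):
            # str.endswith accepts a tuple of suffixes.
            if name.lower().endswith(extensions):
                yield os.path.join(directory, name)


# Demo on a throwaway directory with one document and one stray file.
with tempfile.TemporaryDirectory() as tmp:
    for name in ("a.md", "b.tmp"):
        open(os.path.join(tmp, name), "w").close()
    found = [os.path.basename(path) for path in find_documents(tmp)]

print(found)  # ['a.md']
```

Each yielded path could then be handed to partition(filename=path) in place of the unfiltered loop.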

Limitations of unstructured

This library has some issues and limitations as well.

- When loading and parsing docx files, it can’t properly recognize bullet points as ListItem and most of the time labels them as NarrativeText or Title. This makes the Title recognition unreliable as well, since when you look at the output, you can’t tell for sure whether each Title is actually a title or a list item incorrectly labeled as a Title. (issue on GitHub)

- When working with large documents, there isn’t any way to know the parents of each paragraph or title. This would be a very useful feature to have, especially when feeding the data back to an LLM. (issue on GitHub)
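A partial workaround for the missing parent information is to link each element to the nearest preceding Title. The sketch below assumes flat dictionaries shaped like the convert_to_dict output; `attach_parent_titles` and the `parent_title` key are hypothetical. It only gives a single flat level (no heading hierarchy), and it inherits the first limitation: a list item mislabeled as a Title becomes a wrong parent.

```python
def attach_parent_titles(records):
    """Return copies of the records with a `parent_title` key added.

    Each record is linked to the text of the nearest preceding Title.
    Titles themselves, and anything before the first Title, get None.
    """
    current_title = None
    enriched = []
    for record in records:
        if record["type"] == "Title":
            enriched.append({**record, "parent_title": None})
            current_title = record["text"]
        else:
            enriched.append({**record, "parent_title": current_title})
    return enriched


sample_records = [
    {"type": "NarrativeText", "text": "Text before any title."},
    {"type": "Title", "text": "My test title"},
    {"type": "NarrativeText", "text": "And here is a sample text."},
]

for item in attach_parent_titles(sample_records):
    print(item["type"], "->", item["parent_title"])
```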

Alternatives

After playing with unstructured, I looked for better alternatives for reading documents with Python. Although I will need to load documents in various formats, I narrowed my search to alternatives for reading docx files first (as this is the format you get when downloading a large folder of documents from Google Drive). Here is what I found:

python-docx

- It seems powerful, but it’s complicated to work with.

- I tried loading and parsing a few docx files. The biggest issue I experienced was with loading any text that includes hyperlinks. For some unknown reason, the texts of hyperlinks are returned empty in the final output. This makes it unusable for my purpose, since the link texts provide valuable information in the text.

- Pro: It is able to provide the heading level info for titles (as Heading 1, Heading 2, etc.).

docx2txt

- It uses python-docx under the hood.

- Only returns a giant full-text string of the loaded document. This would require me to split my documents into meaningful chunks, which is not a trivial task.

- Pro: It doesn’t have any problems with hyperlinks and the output text is readable and useful.

- Pro: It is also very easy to use.
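A naive starting point for chunking one large string is to split on blank lines and merge short paragraphs up to a size limit. This is a sketch under the assumption that paragraphs in the extracted text are separated by blank lines; `split_into_chunks` and `max_chars` are hypothetical names, and this does nothing clever about headings or lists.

```python
import re


def split_into_chunks(full_text, max_chars=200):
    """Group blank-line-separated paragraphs into chunks of up to max_chars.

    A single paragraph longer than max_chars is kept whole as its own chunk.
    """
    paragraphs = [p.strip() for p in re.split(r"\n\s*\n", full_text) if p.strip()]
    chunks, current = [], ""
    for paragraph in paragraphs:
        candidate = f"{current}\n\n{paragraph}" if current else paragraph
        if current and len(candidate) > max_chars:
            # Adding this paragraph would overflow, so close the chunk.
            chunks.append(current)
            current = paragraph
        else:
            current = candidate
    if current:
        chunks.append(current)
    return chunks


text = "First paragraph.\n\nSecond paragraph.\n\n\nThird one is here."
print(split_into_chunks(text, max_chars=40))
# ['First paragraph.\n\nSecond paragraph.', 'Third one is here.']
```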

simplify_docx

- It works on top of python-docx.

- This library basically converts the complicated output of python-docx to an easier-to-use JSON output.

- It also has the same issue with hyperlinks and returns empty text when there is a link in the paragraph.

So I will continue using unstructured for now. It’s worth mentioning that, yes, this could be accomplished more easily using LangChain or other similar tools. However, part of my motivation in building this personal AI assistant is the learning journey. By using unstructured to load documents and other similar tools for embeddings and so on, I’m gaining a deeper understanding of the underlying processes, rather than using a one-stop-shop solution like LangChain.

I’ll be sharing more about the progress I’m making in building this personal AI assistant in future posts, so stay tuned.

Update 2023-07-07:

Using pandoc

Raphael suggested using pandoc to extract the text from documents. I wasn’t familiar with pandoc, so I played with it, tweaked Raphael’s suggestion, and was able to get plain text from docx files using:

from sh import pandoc

plaintext_output = str(pandoc("path_to_docx_file.docx", to="plain"))

This returns very clean plain text from any document, so you can give it a Markdown or any other text file and get the same plain text. I just need to remove the footnote references (currently shown as [1], [2], etc. in the plaintext output) to get actual plain text, but I think this is as good as we can get with extracting text from docx and Markdown files.
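Removing those footnote references can be done with a regular expression. Here is a minimal sketch, assuming the markers always look like a number in square brackets; `strip_footnote_refs` is a hypothetical helper, and it would also remove any legitimate bracketed numbers in the text:

```python
import re


def strip_footnote_refs(text):
    """Remove bracketed numeric markers such as [1] or [12] from text."""
    without_refs = re.sub(r"\[\d+\]", "", text)
    # Collapse any double spaces left behind by a removed marker.
    return re.sub(r"[ \t]{2,}", " ", without_refs)


sample = "Pandoc is handy[1] for conversions [2] like this."
print(strip_footnote_refs(sample))
# Pandoc is handy for conversions like this.
```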